If Google can’t index your pages, they won’t rank. Website indexability determines whether your content appears in search results at all. Without proper indexing, even the best content generates zero organic traffic.

This technical SEO fundamental separates visible websites from invisible ones. A business can lose a large share of its potential search traffic simply because search engines can’t add key pages to the index.

This guide covers everything you need to know about indexability: what it means, why it matters, common problems, and exactly how to fix them for sustainable organic growth.

What Is Website Indexability?

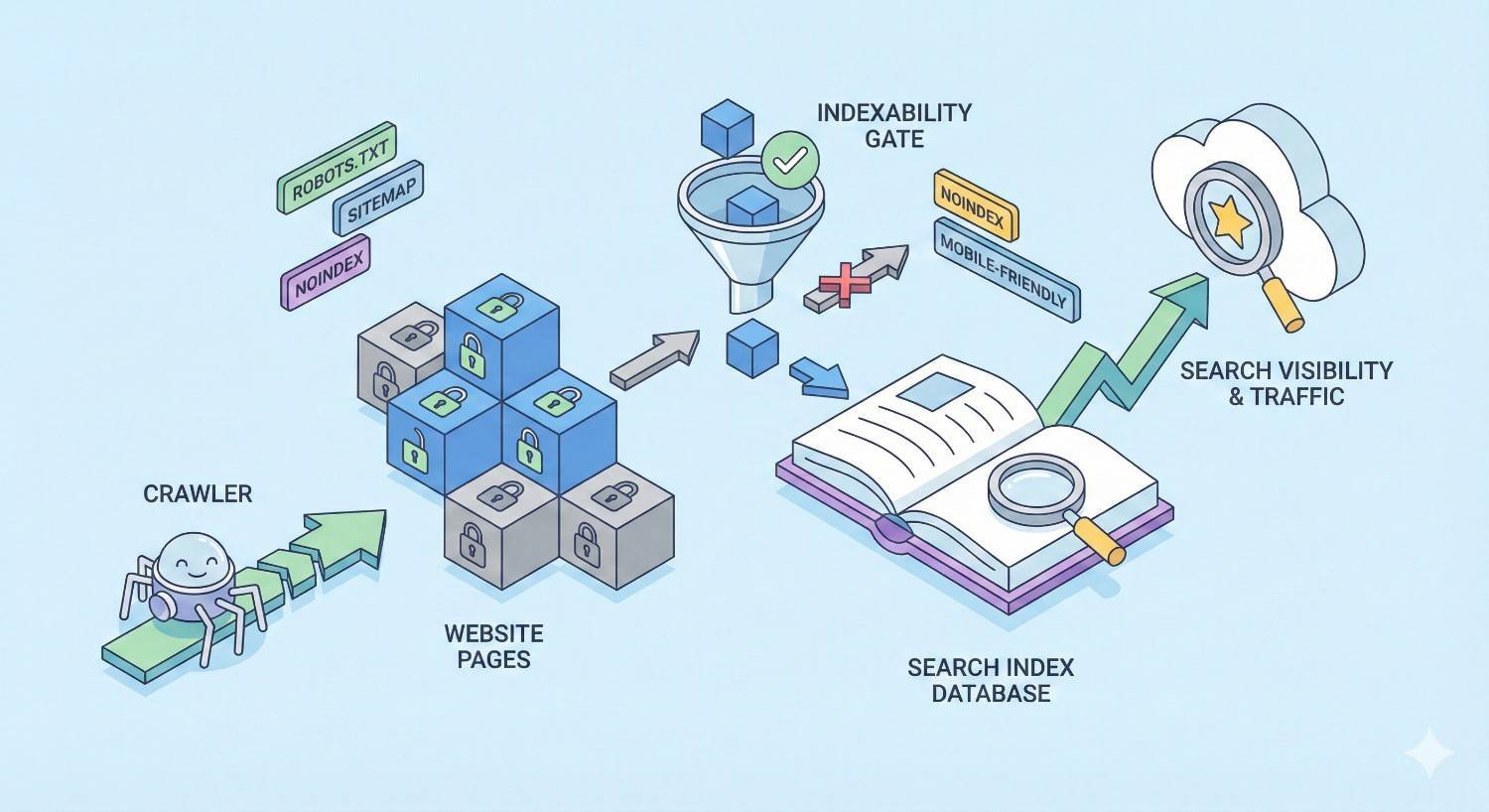

Understanding indexability starts with recognizing its role in the search ecosystem. Before any page can rank for keywords or drive organic traffic, search engines must first discover it, evaluate it, and store it in their database.

Definition of Indexability in SEO

Indexability refers to a webpage’s ability to be added to a search engine’s index. The index is essentially a massive database containing information about billions of web pages that search engines reference when processing queries.

A page is considered indexable when it meets all technical requirements that allow search engine bots to store it in their database. This includes having proper server responses, no blocking directives, and sufficient content quality.

When a page lacks indexability, it becomes invisible to search engines. No matter how valuable the content or how perfectly optimized the keywords, non-indexed pages cannot appear in search results.

How Search Engines Discover and Index Pages

Search engines use automated programs called crawlers or spiders to discover web pages. Googlebot, for example, follows links from page to page across the internet, collecting information about each URL it encounters.

The discovery process begins when crawlers find URLs pointing to your pages. These URLs might come from links on other websites, internal links within your own site, or your XML sitemap.

Once discovered, crawlers analyze the page content, evaluate its quality, and determine whether it should be added to the index. This evaluation considers factors like content uniqueness, technical accessibility, and overall page quality.

Indexability vs. Crawlability: Key Differences

Crawlability and indexability are related but distinct concepts. Crawlability refers to whether search engine bots can access and read your pages. Indexability determines whether those pages get stored in the search index.

A page can be crawlable but not indexable. For example, if Googlebot can access a page but finds a noindex tag, it will crawl the content but won’t add it to the index.

Think of crawlability as the ability to enter a library and read books. Indexability is whether those books get catalogued and made available for others to find. Both must work together for pages to appear in search results.

Why Website Indexability Matters for Organic Visibility

Indexability forms the foundation of all organic search performance. Without it, every other SEO effort becomes meaningless. Understanding this connection helps prioritize technical SEO investments.

The Direct Link Between Indexing and Rankings

The relationship is straightforward: unindexed pages cannot rank. Search engines only return results from their index, so pages outside that database never appear for any query.

This creates a binary situation. Either your page exists in the index and has ranking potential, or it doesn’t exist and has zero chance of generating organic traffic.

Even partial indexing problems cause significant issues. If Google indexes only 60% of your site, 40% of your content has no opportunity to attract search visitors regardless of its quality.

How Poor Indexability Impacts Traffic and Revenue

Indexability problems directly translate to lost business opportunities. Every non-indexed page represents potential customers who will never find your content through search.

Consider an e-commerce site with 10,000 product pages where 2,000 aren’t indexed. Those 2,000 products are essentially invisible to organic search traffic. If each product page could generate even modest traffic, the cumulative loss becomes substantial.

Service businesses face similar challenges. Blog posts, service pages, and location pages that aren’t indexed can’t attract the leads they were created to generate. The content investment yields no return.

Indexability as a Foundation for SEO Success

Technical SEO, content strategy, and link building all depend on proper indexability. These efforts only produce results when pages can actually appear in search results.

Building links to non-indexed pages wastes resources. Creating content that search engines can’t store provides no organic value. Optimizing keywords on invisible pages accomplishes nothing.

Smart SEO strategy addresses indexability first. Once pages are properly indexed, other optimization efforts can compound and generate returns. Without this foundation, everything else becomes ineffective.

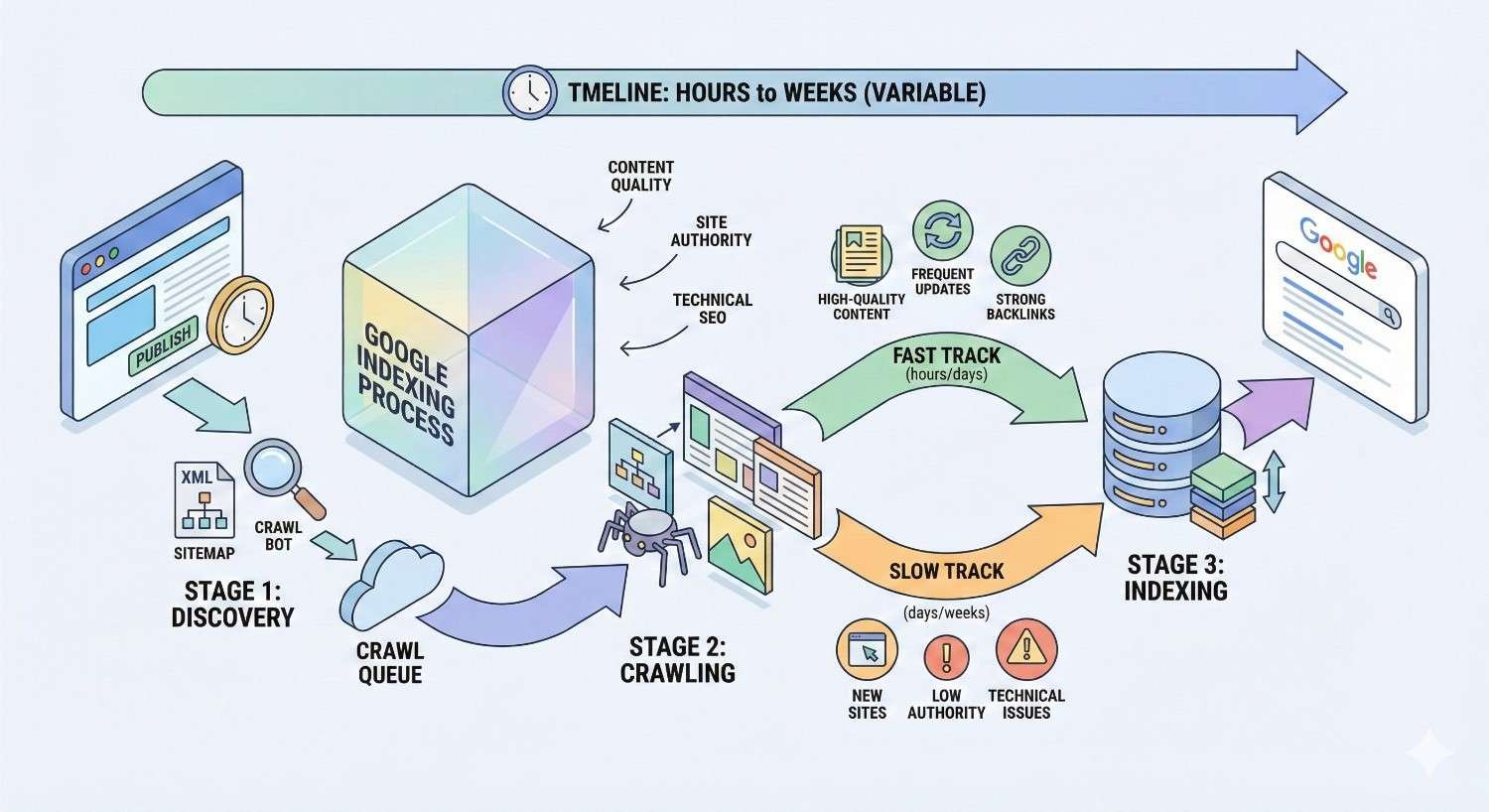

How Search Engines Index Your Website

Understanding the indexing process helps diagnose problems and optimize for better coverage. Search engines follow a systematic approach to discovering, evaluating, and storing web content.

The Crawling Process Explained

Crawling begins when search engine bots request your pages through HTTP requests. These bots follow links, read content, and collect data about each URL they visit.

Googlebot maintains a crawl queue containing URLs it plans to visit. This queue gets populated through various discovery methods including sitemaps, external links, and previously crawled pages on your site.

During crawling, bots download page content, parse HTML, and extract information. They identify links to other pages, which get added to the crawl queue for future visits.
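To make the crawl queue concrete, here is a minimal Python sketch of breadth-first discovery over a small in-memory site. The `EXAMPLE_SITE` pages and the `crawl` function are hypothetical; real crawlers also respect robots.txt, schedule revisits, and render JavaScript, none of which this models.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags, the way a crawler finds new URLs."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(site, start_url, max_pages=100):
    """Breadth-first crawl over an in-memory site mapping URL -> HTML.

    Returns URLs in the order they were taken off the crawl queue.
    """
    queue = deque([start_url])
    seen = {start_url}
    fetched = []
    while queue and len(fetched) < max_pages:
        url = queue.popleft()
        html = site.get(url)
        if html is None:  # stands in for a 404 or unreachable URL
            continue
        fetched.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments before queueing.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return fetched


# Hypothetical four-page site plus one page that nothing links to.
EXAMPLE_SITE = {
    "https://example.com/": '<a href="/blog/">Blog</a> <a href="/about">About</a>',
    "https://example.com/blog/": '<a href="post-1">First post</a>',
    "https://example.com/blog/post-1": '<a href="/">Home</a>',
    "https://example.com/about": "<p>About us</p>",
    "https://example.com/orphan": "<p>No page links here.</p>",
}

print(crawl(EXAMPLE_SITE, "https://example.com/"))
```

The crawl fetches the homepage, blog, about page, and post, in that order. The `/orphan` page never enters the queue because nothing links to it, which is how orphan pages slip past link-based discovery.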

From Crawling to Indexing: What Happens Next

After crawling, search engines process the collected data. This processing phase analyzes content, evaluates quality, and determines indexing eligibility.

Google’s systems render JavaScript, extract text content, identify images, and understand page structure. This rendering process can take additional time, especially for JavaScript-heavy pages.

If the page passes quality thresholds and contains no blocking directives, it gets added to the index. The indexed version includes information about the page’s content, structure, and relevance signals.

How Google Decides What to Index

Google doesn’t index every page it crawls. The search engine evaluates pages against quality guidelines and makes selective decisions about what deserves index space.

Pages with thin content, duplicate information, or low value may be crawled but not indexed. Google’s systems prioritize unique, valuable content that serves user needs.

Technical factors also influence indexing decisions. Pages with proper canonical tags, clean URL structures, and fast load times have better indexing prospects than technically problematic pages.

Understanding Crawl Budget and Its Role

Crawl budget represents the resources Google allocates to crawling your site. Larger sites with more pages face greater crawl budget constraints than smaller sites.

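Google determines crawl budget from two factors: the crawl capacity limit (often called the crawl rate limit) and crawl demand. The capacity limit keeps Googlebot from overloading your server, while crawl demand reflects how much Google wants to crawl your content, driven largely by how popular your URLs are and how often they change.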

For sites with thousands of pages, crawl budget optimization becomes critical. Wasting crawl budget on low-value pages means important pages may not get crawled frequently enough to maintain fresh index coverage.
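One practical way to see where crawl budget goes is to count Googlebot requests in your server access logs. The sketch below assumes the Apache/Nginx combined log format and uses made-up sample lines. It matches Googlebot by user-agent string only, which can be spoofed, so a real audit should also verify requests through a reverse DNS lookup.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Request, status, and user-agent fields of a combined-format access log line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawl_budget_breakdown(lines):
    """Count Googlebot requests by bucket: error responses, parameter URLs, clean URLs."""
    buckets = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line, or not claiming to be Googlebot
        if int(match["status"]) >= 400:
            buckets["error responses"] += 1
        elif urlsplit(match["path"]).query:
            buckets["parameter URLs"] += 1
        else:
            buckets["clean URLs"] += 1
    return buckets


# Made-up sample lines using documentation IP ranges, not real traffic.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'192.0.2.10 - - [10/Mar/2025:10:00:00 +0000] "GET /products/widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/Mar/2025:10:00:01 +0000] "GET /products?sort=price&page=7 HTTP/1.1" 200 4800 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/Mar/2025:10:00:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [10/Mar/2025:10:00:03 +0000] "GET /products/widget HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

print(crawl_budget_breakdown(SAMPLE_LOG))
```

If a large share of Googlebot hits lands on parameter URLs or error responses, crawl budget is being spent on pages you probably don’t want indexed.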

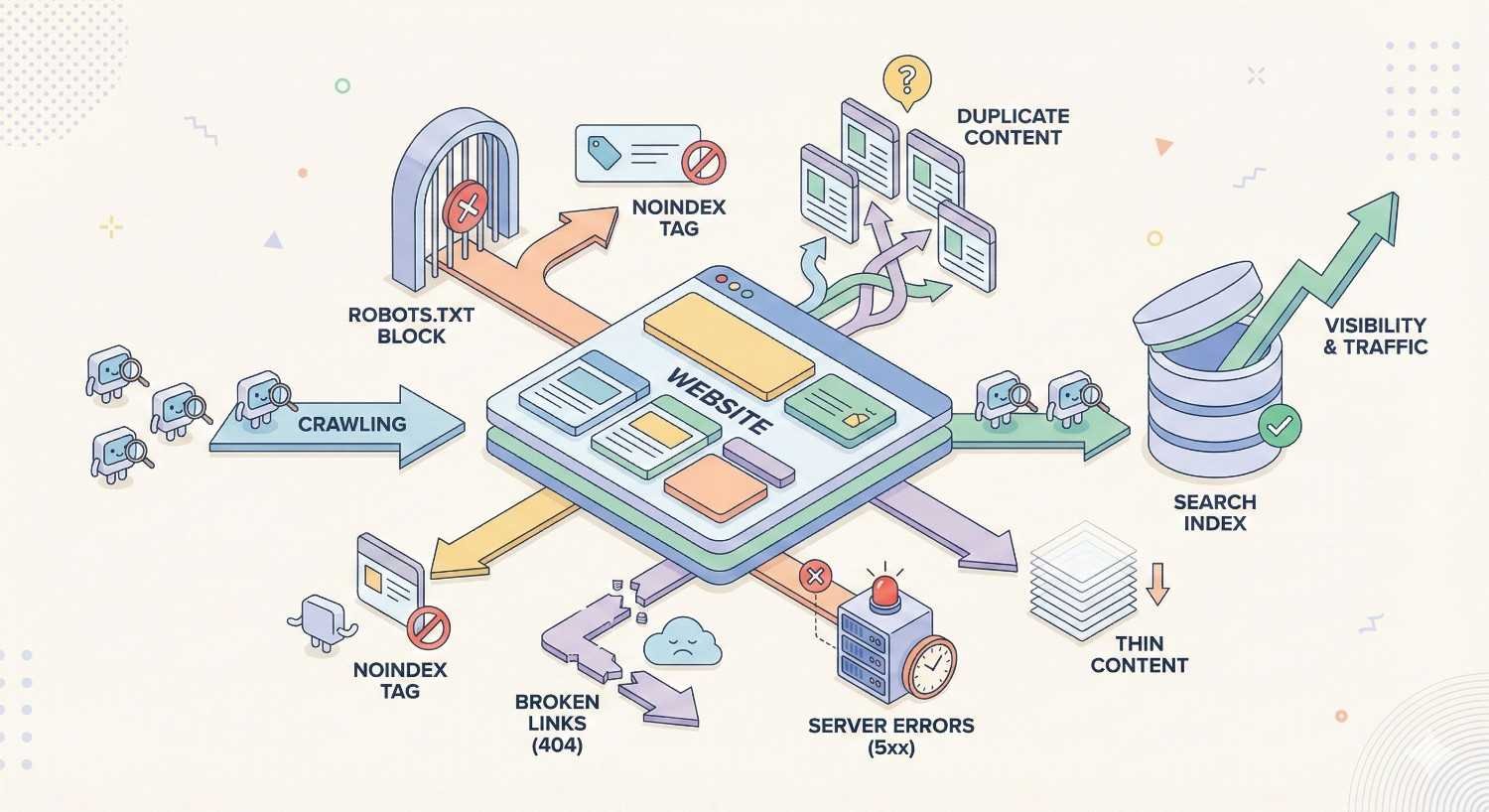

Common Website Indexability Issues

Multiple technical problems can prevent pages from being indexed. Identifying these issues is the first step toward resolving them and improving organic visibility.

Blocked Pages (Robots.txt Errors)

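The robots.txt file tells search engines which pages they can and cannot crawl. Misconfigured rules can accidentally block important pages from being crawled. Blocked pages generally can’t be indexed with their content; at most, Google may index the bare URL if other pages link to it.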

Common mistakes include overly broad disallow rules that block entire directories or using wildcards that match unintended URLs. A single misplaced rule can hide thousands of pages from search engines.

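Testing robots.txt changes before deploying them prevents accidental blocking. Google Search Console’s robots.txt report (which replaced the older robots.txt tester) shows the robots.txt files Google has fetched and any parsing problems, and the URL Inspection tool confirms whether a specific URL is blocked.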
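A lightweight pre-deployment check is to run your most important URLs against the proposed rules. This sketch uses Python’s standard `urllib.robotparser`. Note that it only does simple prefix matching and applies the first matching rule, whereas Google supports `*` and `$` wildcards and applies the most specific rule, so treat it as a rough check for simple files (Google publishes an open-source robots.txt parser if you need its exact behavior). The rules and URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical proposed robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

# Hypothetical URLs you expect to stay crawlable (plus one that should be blocked).
IMPORTANT_URLS = [
    "https://example.com/products/widget",
    "https://example.com/search-engine-optimization-guide",
    "https://example.com/admin/login",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the subset of urls that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

The output lists the SEO guide alongside `/admin/login`: a `Disallow: /search` rule meant for internal search results also matches any path that merely begins with `/search`.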

Noindex Tags Preventing Indexation

The noindex meta tag explicitly tells search engines not to index a page. While useful for keeping certain pages out of search results, accidental noindex tags cause serious problems.

These tags sometimes get added during development and forgotten before launch. Template-level noindex tags can affect entire sections of a site without anyone realizing the issue.

Noindex directives sent in the X-Robots-Tag HTTP header create the same problem but are harder to detect, since they don’t appear in the page’s HTML source. Both locations need checking during indexability audits.
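Because the header and the meta tag live in different places, an audit script should look at both. This minimal sketch takes HTML and a list of response headers you have already fetched (fetching is left out) and reports where a noindex appears. The `noindex_sources` name is hypothetical, and the function conservatively treats crawler-scoped header values such as `googlebot: noindex` as applying.

```python
from html.parser import HTMLParser

NOINDEX_VALUES = {"noindex", "none"}  # "none" is shorthand for "noindex, nofollow"


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def noindex_sources(html, headers):
    """Report where noindex appears: the meta robots tag, the X-Robots-Tag header, or both.

    headers is a list of (name, value) pairs, since X-Robots-Tag may repeat.
    """
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if parser.directives & NOINDEX_VALUES:
        sources.append("meta robots tag")
    for name, value in headers:
        if name.lower() != "x-robots-tag":
            continue
        # Keep only the part after any "crawler:" prefix, e.g. "googlebot: noindex".
        tokens = {token.split(":")[-1].strip().lower() for token in value.split(",")}
        if tokens & NOINDEX_VALUES:
            sources.append("X-Robots-Tag header")
            break
    return sources


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(noindex_sources(page, [("X-Robots-Tag", "googlebot: noindex")]))
```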

Duplicate Content and Canonicalization Problems

When multiple URLs contain identical or very similar content, search engines must choose which version to index. Without proper canonical tags, they may pick a different version than the one you prefer.

Duplicate content commonly occurs through URL parameters, www vs. non-www versions, HTTP vs. HTTPS, and trailing slashes. Each variation creates a separate URL that search engines might treat as a distinct page.

Proper canonical tag implementation tells search engines which URL represents the preferred version. This consolidates indexing signals and prevents dilution across duplicate URLs.
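Before adding canonical tags, it helps to see which crawled URLs are really the same page. The sketch below collapses the URL variations described earlier in this section into one preferred form. The policy it encodes (HTTPS, no `www`, no trailing slash, ignoring the hypothetical `IGNORED_PARAMS` set) is an assumption; choose whatever matches your site, then point each duplicate’s canonical tag at the preferred URL.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters assumed never to change page content on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def preferred_url(url):
    """Map a URL variant to one preferred form.

    Assumed policy: HTTPS, host without "www.", no trailing slash (except the
    root), ignored parameters dropped, remaining parameters sorted. Ports are
    dropped too, for brevity. Paths keep their case because they are case-sensitive.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is already lowercased
    if host.startswith("www."):
        host = host[len("www."):]
    path = (parts.path or "/").rstrip("/") or "/"
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit(("https", host, path, urlencode(params), ""))


def duplicate_groups(urls):
    """Group URLs by preferred form; keep only groups with more than one member."""
    groups = defaultdict(list)
    for url in urls:
        groups[preferred_url(url)].append(url)
    return {preferred: members for preferred, members in groups.items() if len(members) > 1}


CRAWLED = [
    "http://www.example.com/shoes/",
    "https://example.com/shoes",
    "https://example.com/shoes?utm_source=newsletter",
    "https://www.example.com/shoes/?sessionid=abc123",
    "https://example.com/shoes?color=red",
]
print(duplicate_groups(CRAWLED))
```

The first four URLs collapse to `https://example.com/shoes` and form one duplicate group; the `color=red` URL stays separate because that parameter is assumed to change the content.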

Orphan Pages and Poor Internal Linking

Orphan pages have no internal links pointing to them. Without these links, search engines struggle to discover and prioritize these pages for crawling and indexing.

Internal linking serves as a roadmap for crawlers. Pages with many internal links signal importance and get crawled more frequently. Orphan pages may never be discovered or may be deprioritized.

Site architecture should ensure every important page receives internal links from relevant pages. Navigation menus, contextual links, and footer links all contribute to internal link coverage.
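If you have a sitemap and a crawl export of internal links, finding orphans is a set difference: sitemap URLs that no other page links to. This sketch assumes both inputs already use one consistent URL form; the `find_orphans` name and the data are made up for illustration.

```python
def find_orphans(sitemap_urls, internal_links, homepage):
    """Return sitemap URLs that no other page links to.

    internal_links maps each crawled page to the list of URLs it links to.
    The homepage is excluded because it is the crawl entry point.
    """
    linked = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it discoverable.
        linked.update(target for target in targets if target != source)
    return sorted(set(sitemap_urls) - linked - {homepage})


HOME = "https://example.com/"
SITEMAP = [
    HOME,
    "https://example.com/services",
    "https://example.com/services/seo",
    "https://example.com/spring-sale",
]
LINKS = {
    HOME: ["https://example.com/services"],
    "https://example.com/services": ["https://example.com/services/seo", HOME],
    "https://example.com/spring-sale": ["https://example.com/spring-sale"],
}
print(find_orphans(SITEMAP, LINKS, HOME))
```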

Slow Page Speed and Server Errors

Server response time affects crawling efficiency. Slow servers force crawlers to wait longer for each page, reducing the number of pages they can crawl within their allocated time.

Server errors like 500 responses prevent crawling entirely. If crawlers consistently encounter errors when requesting pages, those pages won’t get indexed and may eventually be removed from the index.

Monitoring server performance and error rates helps identify problems before they impact indexing. Regular uptime monitoring catches issues that might otherwise go unnoticed.
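Here is a minimal monitoring sketch using only Python’s standard library. It fetches a URL once, records the status code and an approximate time to first byte (timed until the status line and headers arrive, so it includes connection setup), and flags server errors and slow responses. The function names and the 200 ms default are assumptions to adjust; a production monitor would run on a schedule and alert on trends rather than single requests.

```python
import http.client
import time
from urllib.parse import urlsplit


def check_url(url, timeout=10.0):
    """Fetch url once and return (status_code, approximate_ttfb_ms)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path, headers={"User-Agent": "indexability-check/0.1"})
        response = conn.getresponse()  # returns once the status line and headers arrive
        ttfb_ms = (time.perf_counter() - start) * 1000
        response.read()
        return response.status, ttfb_ms
    finally:
        conn.close()


def crawl_health_issues(status, ttfb_ms, ttfb_budget_ms=200.0):
    """Classify one check result; the 200 ms budget is an adjustable assumption."""
    issues = []
    if status >= 500:
        issues.append("server error")
    elif status >= 400:
        issues.append("client error")
    if ttfb_ms > ttfb_budget_ms:
        issues.append("slow response")
    return issues
```

For example, `crawl_health_issues(*check_url("https://example.com/"))` returns an empty list for a healthy page and names each problem otherwise.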

JavaScript Rendering Issues

Modern websites often rely heavily on JavaScript to display content. Search engines must render this JavaScript to see the actual page content, which creates potential indexing challenges.

Google can render JavaScript, but the process takes additional resources and time. Content that depends on JavaScript rendering may be indexed more slowly or incompletely compared to server-rendered HTML.

Critical content should be available in the initial HTML response when possible. For JavaScript-dependent content, testing with Google’s URL Inspection tool verifies that rendered content matches expectations.
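A quick way to check whether critical content depends on JavaScript is to look at the raw HTML response (what `curl` or View Source returns, not the rendered DOM in DevTools) and confirm that key phrases are present. The sketch below does that with Python’s `html.parser`, ignoring text inside `<script>` and `<style>` tags; the function name and sample pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text from raw HTML, skipping the bodies of <script> and <style> tags."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_initial_html(raw_html, must_have):
    """Return the phrases in must_have that do not appear in the unrendered HTML text."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in must_have if phrase.lower() not in text]


# Hypothetical pages: a client-side rendered shell versus server-rendered HTML.
SPA_SHELL = '<html><body><div id="root"></div><script>render("Blue Widget", "$19.99")</script></body></html>'
SERVER_RENDERED = "<html><body><h1>Blue Widget</h1><p>Price: $19.99</p><script>hydrate()</script></body></html>"

print(missing_from_initial_html(SPA_SHELL, ["Blue Widget", "$19.99"]))
print(missing_from_initial_html(SERVER_RENDERED, ["Blue Widget", "$19.99"]))
```

The shell page reports both phrases as missing even though they sit inside the script, because Google only sees them after rendering.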

How to Check If Your Website Is Indexed

Regular indexing audits reveal problems before they significantly impact traffic. Multiple methods exist for checking index status, from simple searches to comprehensive tools.

Using Google Search Console for Index Status

Google Search Console provides the most authoritative data about your site’s index status. The Page indexing report (formerly called the Index Coverage report) shows how many pages Google has indexed and why others were left out.

The report splits URLs into two states, Indexed and Not indexed, and lists a specific reason for each group of non-indexed URLs.

The URL Inspection tool checks individual pages. It shows whether a specific URL is indexed, when it was last crawled, and any issues Google detected during processing.

The “site:” Search Operator Method

Searching “site:yourdomain.com” in Google shows a sample of the pages Google has indexed from your domain. This quick check gives a rough count and lets you browse what’s actually in the index.

Combining the site operator with specific queries helps find indexed pages on particular topics. For example, “site:yourdomain.com product” shows indexed pages containing “product.”

This method has limitations. The count shown is approximate, and not all indexed pages appear in site search results. Use it for quick checks, but rely on Search Console for accurate data.

Understanding the Page Indexing Report

The Page indexing report in Search Console breaks down indexing status across your entire site. Understanding each reason Google lists helps prioritize fixes.

“Indexed” pages are in Google’s index and can appear in search results. “Not indexed” pages aren’t, sometimes intentionally: a thank-you page carrying a noindex tag, or a duplicate consolidated under its canonical, is working as designed.

Reasons such as “Server error (5xx)”, “Blocked by robots.txt”, or “Excluded by ‘noindex’ tag” on pages you want in search need immediate attention, since they point to technical barriers rather than deliberate choices.

Third-Party Tools for Indexability Audits

SEO tools like Screaming Frog, Sitebulb, and Ahrefs provide additional indexability insights. These tools crawl your site similarly to search engines and identify potential issues.

Screaming Frog can detect noindex tags, canonical issues, and blocked pages across your entire site. The tool exports data for analysis and tracks changes over time.

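The site audit features in Ahrefs and Semrush can crawl the URLs in your sitemap and flag those that aren’t indexable, such as noindexed, redirected, or erroring pages. This comparison reveals gaps between what you ask search engines to index and what they can index.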

How to Improve Website Indexability

Fixing indexability issues requires systematic attention to technical details. Each improvement removes barriers between your content and search engine indexes.

Submitting and Optimizing Your XML Sitemap

XML sitemaps tell search engines about pages you want indexed. Submitting a sitemap through Search Console helps Google discover your content faster.

Effective sitemaps include only indexable pages. Remove URLs with noindex tags, redirects, or error responses. Keep each sitemap file to at most 50,000 URLs and 50MB uncompressed, and split larger sites across several sitemaps listed in a sitemap index file.

Update sitemaps when adding new content. Dynamic sitemaps that automatically include new pages ensure search engines learn about fresh content quickly.
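If your CMS or crawler can export each page’s status code, noindex flag, and canonical URL, a sitemap that lists only indexable pages can be generated from that data. This is a sketch under those assumptions (the `PageRecord` fields are hypothetical). It enforces the 50,000-URL limit by splitting into several files but does not check the 50MB size limit.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # protocol limit; the 50MB size limit is not checked here


@dataclass
class PageRecord:
    """Hypothetical per-page export from a CMS or crawler."""
    url: str
    status: int
    noindex: bool
    canonical: Optional[str] = None  # None means no canonical tag


def is_sitemap_eligible(page):
    """List only 200-status, indexable pages that are self-canonical or have no canonical."""
    return page.status == 200 and not page.noindex and page.canonical in (None, page.url)


def build_sitemaps(pages, max_urls=MAX_URLS_PER_SITEMAP):
    """Return sitemap XML documents, starting a new file every max_urls URLs."""
    urls = [page.url for page in pages if is_sitemap_eligible(page)]
    documents = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            # ElementTree escapes "&" in query strings, as the protocol requires.
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        body = ET.tostring(urlset, encoding="unicode")
        documents.append('<?xml version="1.0" encoding="UTF-8"?>\n' + body)
    return documents


PAGES = [
    PageRecord("https://example.com/", 200, False),
    PageRecord("https://example.com/a", 200, False, "https://example.com/a"),
    PageRecord("https://example.com/b", 301, False),  # redirect
    PageRecord("https://example.com/c", 200, True),  # noindexed
    PageRecord("https://example.com/d?x=1", 200, False, "https://example.com/d"),  # canonicalized
]
print(build_sitemaps(PAGES)[0])
```

Only the homepage and `/a` are listed; the redirect, the noindexed page, and the canonicalized parameter URL are left out. When the function returns more than one document, list each file in a sitemap index.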

Fixing Robots.txt Configuration

Review robots.txt rules to ensure important pages aren’t blocked. Remove or modify overly broad disallow directives that prevent crawling of valuable content.

Test changes before deploying them, for example by checking a list of URLs that must stay crawlable against the new rules. After publishing, confirm in Search Console’s robots.txt report that Google has fetched the updated file.

Consider what actually needs blocking. Most sites only need to block admin areas, duplicate parameter URLs, and internal search results. Everything else should remain crawlable.

Resolving Noindex and Canonical Issues

Audit your site for unintended noindex tags. Check both meta tags in HTML and X-Robots-Tag HTTP headers. Remove noindex directives from pages that should be indexed.

Review canonical tags to ensure they point to the correct URLs. Self-referencing canonicals on unique pages and proper canonicals on duplicate pages help search engines index the right versions.

Fix canonical chains, where page A’s canonical points to page B and page B’s canonical points to page C. Point each canonical directly at the final preferred URL to avoid confusion.
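Chains are easy to find programmatically once you have extracted each page’s canonical target from a crawl. This sketch follows canonicals until it reaches a self-referencing URL, reports pages that take two or more hops, and raises an error on loops. The input mapping stands in for hypothetical crawl output.

```python
def resolve_canonical(url, canonical_of):
    """Follow declared canonicals from url until a self-canonical page is reached.

    canonical_of maps each crawled URL to the canonical it declares; URLs
    without an entry are treated as self-canonical. Returns (final_url, hops)
    and raises ValueError if the canonicals form a loop.
    """
    path = [url]
    current = url
    while True:
        target = canonical_of.get(current, current)
        if target == current:
            return current, len(path) - 1
        if target in path:
            raise ValueError("canonical loop: " + " -> ".join(path + [target]))
        path.append(target)
        current = target


def chains_to_fix(canonical_of):
    """Return {page: final_canonical} for pages whose canonical takes two or more hops."""
    fixes = {}
    for url in canonical_of:
        final, hops = resolve_canonical(url, canonical_of)
        if hops > 1:
            fixes[url] = final
    return fixes


# Hypothetical crawl output: A -> B -> C, and C is self-canonical.
CANONICALS = {
    "https://example.com/a": "https://example.com/b",
    "https://example.com/b": "https://example.com/c",
    "https://example.com/c": "https://example.com/c",
}
print(chains_to_fix(CANONICALS))
```

Page A should point straight at C; page B already needs only one hop.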

Improving Internal Linking Structure

Create clear pathways from your homepage to all important pages. As a rule of thumb, no important page should require more than three clicks from the homepage to reach.

Add contextual internal links within content. When one page mentions a topic covered elsewhere on your site, link to that related page.

Review navigation menus to ensure key pages are accessible. Category pages, service pages, and important content should appear in main navigation or prominent footer links.
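Click depth is a shortest-path question, so a breadth-first search from the homepage answers it. The sketch below takes a hypothetical map of each page to the pages it links to and flags anything deeper than three clicks. Pages missing from the result entirely are unreachable from the homepage, which makes them orphans.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, homepage, max_clicks=3):
    """Pages whose shortest path from the homepage exceeds max_clicks."""
    return sorted(page for page, depth in click_depths(links, homepage).items() if depth > max_clicks)


# Hypothetical paginated blog archive: the old post is only reachable via page 3.
LINKS = {
    "/": ["/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
}
print(too_deep(LINKS, "/"))
```

Linking the old post from a category page or a related article would bring it back within three clicks.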

Enhancing Page Speed and Core Web Vitals

Faster pages get crawled more efficiently. Optimize images, enable compression, and leverage browser caching to reduce load times.

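Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift) measure real user experience; Interaction to Next Paint replaced First Input Delay in March 2024. These metrics feed Google’s page experience signals rather than deciding whether a page gets indexed, though server-side speed improvements help crawling as well.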

Monitor server response times. A commonly cited target is a Time to First Byte under 200 milliseconds. Slow servers waste crawl budget and may prevent complete indexing.

Ensuring Mobile-Friendliness

Google uses mobile-first indexing, meaning it primarily uses the mobile version of pages for indexing and ranking. Mobile-unfriendly pages may face indexing challenges.

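Google retired its standalone Mobile-Friendly Test tool in December 2023, so test pages in a mobile viewport instead, for example with Chrome DevTools device emulation or the rendered screenshot in the URL Inspection tool’s live test. Address issues like text too small to read, clickable elements too close together, and content wider than the screen.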

Responsive design ensures content displays properly across all devices. This approach provides consistent content for both mobile and desktop crawlers.

Requesting Indexing in Google Search Console

The URL Inspection tool allows requesting indexing for specific pages. After fixing issues or publishing new content, use this feature to prompt Google to recrawl.

This request doesn’t guarantee immediate indexing. Google still evaluates the page and decides whether to index it. However, the request can speed up discovery of new or updated content.

Use indexing requests sparingly. The feature is designed for individual URLs, not bulk submissions. For many pages, sitemap submission is more appropriate.

How Long Does It Take for Google to Index a Page?

Indexing timeframes vary significantly based on multiple factors. Setting realistic expectations helps plan content strategies and avoid unnecessary concern.

Typical Indexing Timeframes

New pages on established sites often get indexed within days. Google’s crawlers regularly visit sites with strong crawl demand, discovering new content quickly.

For new websites, initial indexing may take weeks. Search engines need time to discover the site, evaluate its quality, and establish crawl patterns.

Some pages get indexed within hours of publication, especially on authoritative sites with frequent updates. Others may take months if the site has low authority or technical issues.

Factors That Speed Up or Delay Indexing

Site authority significantly impacts indexing speed. Established sites with strong backlink profiles and regular updates get crawled more frequently.

Internal linking to new pages helps crawlers discover them faster. Pages linked from the homepage or main navigation get found sooner than orphan pages.

Technical issues delay indexing. Slow servers, JavaScript rendering requirements, and crawl errors all extend the time between publication and indexing.

Setting Realistic Expectations for New Websites

New websites face the longest indexing delays. Without established authority or external links, search engines have little reason to prioritize crawling.

Building initial authority through quality content and legitimate link building accelerates indexing over time. The first few months typically show the slowest indexing speeds.

Patience is necessary, but passivity isn’t. Actively submit sitemaps, build internal links, and create content worth linking to. These efforts compound to improve indexing speed progressively.

What to Do When Google Won’t Index Your Pages

Persistent indexing problems require systematic diagnosis and resolution. Understanding why pages aren’t indexed guides effective solutions.

Diagnosing Indexing Problems

Start with Google Search Console’s URL Inspection tool. Enter the problematic URL to see Google’s perspective on why it isn’t indexed.

The tool reveals specific issues: noindex tags, canonical to another URL, crawl errors, or quality concerns. Each diagnosis points toward different solutions.

Check the Page indexing report for patterns. If many pages share the same exclusion reason, a site-wide issue likely exists rather than individual page problems.
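To spot patterns quickly, group non-indexed URLs by reason and site section. The sketch assumes you have already turned Search Console exports into `(url, reason)` pairs; the exact export layout, the `exclusion_patterns` name, and the 50% threshold are assumptions.

```python
from collections import Counter
from urllib.parse import urlsplit


def exclusion_patterns(rows, min_share=0.5):
    """Group not-indexed URLs by (reason, first path segment).

    rows: iterable of (url, reason) pairs. Returns the groups that account
    for at least min_share of all rows, largest first.
    """
    counts = Counter()
    for url, reason in rows:
        segments = [segment for segment in urlsplit(url).path.split("/") if segment]
        section = "/" + segments[0] + "/" if segments else "/"
        counts[(reason, section)] += 1
    total = sum(counts.values())
    return [(key, n) for key, n in counts.most_common() if n / total >= min_share]


# Hypothetical export rows: tag archives share one template.
ROWS = [
    ("https://example.com/tag/seo", "Excluded by 'noindex' tag"),
    ("https://example.com/tag/links", "Excluded by 'noindex' tag"),
    ("https://example.com/tag/crawling", "Excluded by 'noindex' tag"),
    ("https://example.com/services/audit", "Crawled - currently not indexed"),
]
print(exclusion_patterns(ROWS))
```

Three of four exclusions coming from `/tag/` with the same reason points to a template-level noindex rather than three separate page problems.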

Step-by-Step Troubleshooting Guide

First, verify the page is crawlable. Check robots.txt rules and ensure no disallow directives block the URL.

Second, confirm no noindex directives exist. Check the meta robots tag in HTML and X-Robots-Tag in HTTP headers.

Third, review canonical tags. Ensure the page either has a self-referencing canonical or no canonical tag rather than pointing to a different URL.

Fourth, evaluate content quality. Thin content, duplicate content, or low-value pages may be crawled but not indexed. Improve content to meet quality thresholds.

Fifth, check for technical errors. Server errors, redirect loops, and timeout issues prevent successful crawling and indexing.
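The five steps can be combined into a simple triage function once the underlying facts are collected. In this sketch the `PageCheck` fields are hypothetical inputs you would gather with your own fetch and parse code, and the 200-word thin-content threshold is an arbitrary placeholder, since Google publishes no word-count rule. It reports every failing step rather than stopping at the first, because one page can have several problems.

```python
from dataclasses import dataclass
from typing import Optional

THIN_CONTENT_WORDS = 200  # hypothetical placeholder, not a Google rule


@dataclass
class PageCheck:
    """Facts about one URL, gathered beforehand (robots.txt test, fetch, HTML parse)."""
    url: str
    robots_allowed: bool
    meta_robots: str  # content of <meta name="robots">, "" if absent
    x_robots_tag: str  # plain X-Robots-Tag directive list, "" if absent
    canonical: Optional[str]  # rel=canonical target, None if absent
    word_count: int
    status: int


def triage(page):
    """Run the five troubleshooting steps in order and return every failure found."""
    problems = []
    if not page.robots_allowed:
        # Note: a blocked page's noindex and canonical are invisible to Google.
        problems.append("1. blocked by robots.txt")
    directives = {d.strip().lower() for d in f"{page.meta_robots},{page.x_robots_tag}".split(",")}
    if directives & {"noindex", "none"}:
        problems.append("2. noindex directive present")
    if page.canonical not in (None, page.url):
        problems.append(f"3. canonical points elsewhere: {page.canonical}")
    if page.word_count < THIN_CONTENT_WORDS:
        problems.append("4. possibly thin content")
    if page.status != 200:
        problems.append(f"5. HTTP status {page.status}")
    return problems


page = PageCheck("https://example.com/b", True, "noindex, follow", "", "https://example.com/a", 120, 200)
for problem in triage(page):
    print(problem)
```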

When to Seek Professional SEO Help

Some indexing problems require advanced technical expertise. JavaScript rendering issues, complex canonical situations, and large-scale crawl budget problems often benefit from professional analysis.

Consider professional help when internal troubleshooting hasn’t resolved issues after several weeks. Persistent problems usually indicate deeper technical challenges.

Professional SEO audits can identify issues that standard tools miss. Expert analysis often reveals interconnected problems that require coordinated solutions.

Website Indexability Best Practices Checklist

Use this checklist to maintain optimal indexability across your website:

Technical Foundation

- Robots.txt allows crawling of all important pages

- No unintended noindex tags on pages that should be indexed

- XML sitemap submitted to Google Search Console

- Sitemap contains only indexable URLs

- Server response times under 200ms TTFB

Content Accessibility

- All important pages reachable within 3 clicks from homepage

- No orphan pages lacking internal links

- Critical content available without JavaScript rendering

- Mobile-friendly design across all pages

- No duplicate content without proper canonicalization

Monitoring and Maintenance

- Weekly review of the Page indexing report

- Monthly crawl of site using SEO tools

- Immediate investigation of indexing drops

- Regular robots.txt and sitemap audits

- Server uptime monitoring active

Quality Signals

- Unique, valuable content on each page

- Proper canonical tags on all pages

- Clean URL structure without excessive parameters

- Fast page load times meeting Core Web Vitals

- No thin content pages diluting site quality

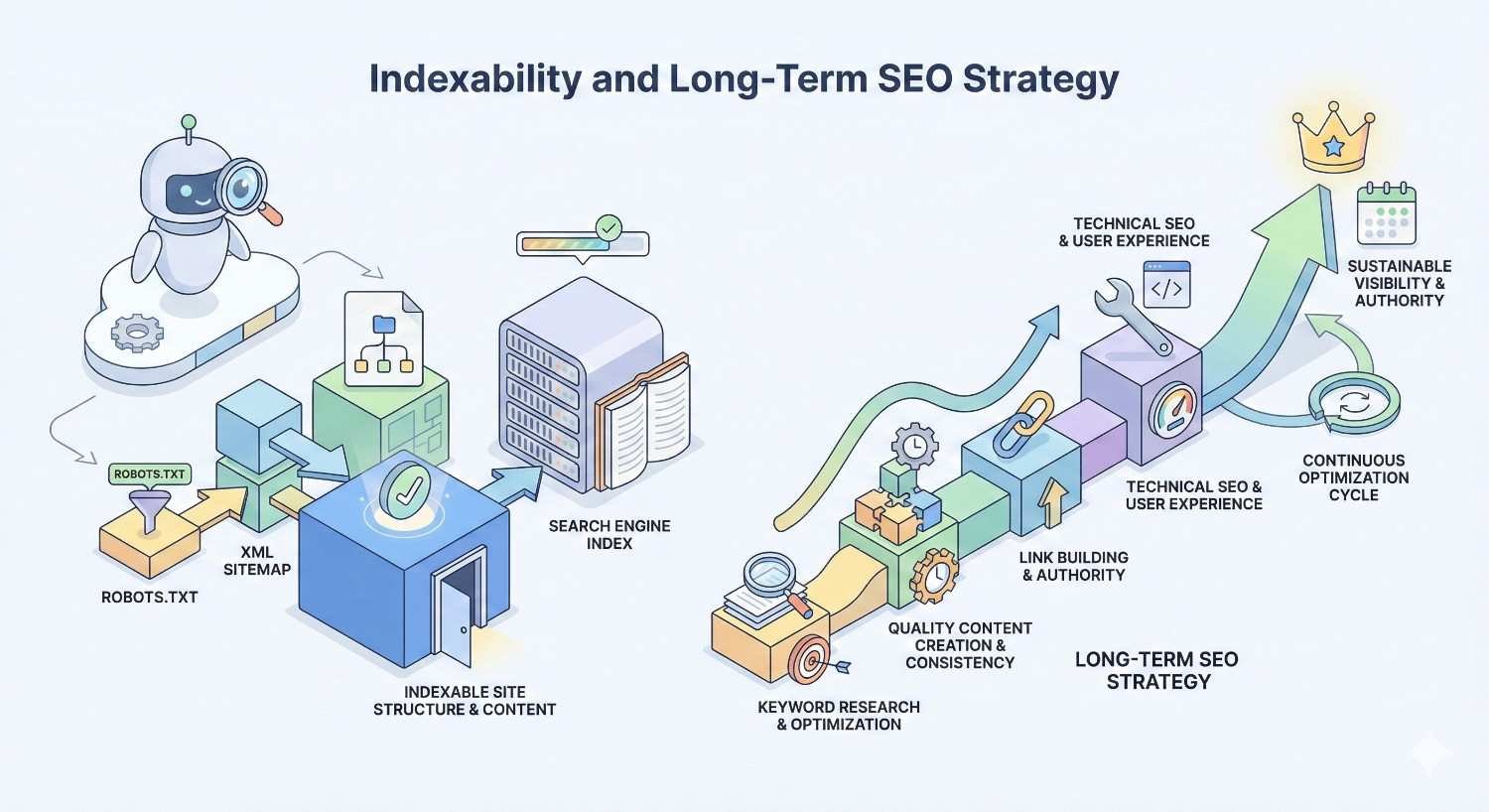

Indexability and Long-Term SEO Strategy

Indexability isn’t a one-time fix but an ongoing component of SEO success. Building sustainable organic growth requires continuous attention to technical foundations.

Building a Technically Sound Website Foundation

Technical SEO creates the infrastructure for all other optimization efforts. Without proper indexability, content and link building investments generate diminished returns.

Prioritize technical health during site development and redesigns. Addressing indexability from the start prevents problems that become harder to fix later.

Document technical standards for your site. Clear guidelines about canonical usage, robots.txt rules, and sitemap management prevent accidental indexability problems.

Ongoing Monitoring and Maintenance

Regular audits catch indexability issues before they significantly impact traffic. Monthly technical reviews identify problems while they’re still small.

Set up alerts for significant changes in indexed page counts. Sudden drops often indicate technical problems requiring immediate attention.

Track indexing metrics alongside traffic data. Correlating indexing changes with traffic changes helps quantify the impact of technical improvements.

How Indexability Fits Into Sustainable Organic Growth

Indexability enables compound growth. As you publish more content and earn more links, proper indexability ensures these investments translate into increased organic visibility.

Sites with strong technical foundations scale more effectively. Adding hundreds of new pages works only when those pages actually get indexed and can rank.

Long-term SEO success requires balancing content creation, link building, and technical maintenance. Neglecting any element limits overall growth potential.

Conclusion

Website indexability determines whether your pages can appear in search results. Without proper indexing, content investments generate zero organic returns, making this technical foundation essential for any SEO strategy.

At White Label SEO Service, we help businesses build technically sound websites that search engines can efficiently crawl and index. Our comprehensive approach addresses indexability alongside content strategy and authority building for sustainable organic growth.

Ready to ensure your website is fully indexed and visible to search engines? Contact our team for a technical SEO audit that identifies indexability issues and creates a clear path to improved organic performance.

Frequently Asked Questions About Website Indexability

What is the difference between indexed and crawled?

Crawled means a search engine bot visited and read your page. Indexed means the page was added to the search engine’s database and can appear in results. A page can be crawled but not indexed if it has quality issues or blocking directives.

Can a page rank without being indexed?

No, a page cannot rank without being indexed. Search engines only return results from their index. If your page isn’t in the index, it has zero chance of appearing for any search query.

How do I remove a page from Google’s index?

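Add a noindex meta tag to the page (and make sure robots.txt doesn’t block it, or Google can’t see the tag) or return a 404/410 status code. For faster results, use the Removals tool in Google Search Console, which hides a URL from Google results for about six months; pair it with noindex or a 404/410 so the removal becomes permanent.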

Does indexability affect all search engines?

Yes, indexability principles apply to all major search engines including Google, Bing, and others. Each search engine has its own index, and pages must be indexed in each to appear in their respective results.

How often does Google re-index pages?

Re-indexing frequency varies by page. High-authority pages with frequent updates may be re-crawled daily. Lower-priority pages might only be re-crawled monthly. You can check last crawl dates in Google Search Console’s URL Inspection tool.

Why are my new pages not getting indexed?

Common causes include noindex tags, robots.txt blocking, poor internal linking, low domain authority, or content quality issues. Use Google Search Console’s URL Inspection tool to identify the specific reason for each page.

How many pages can Google index from my website?

Google has no official limit on pages per site. However, crawl budget constraints mean larger sites may not have all pages indexed. Focus on ensuring your most important pages are indexed rather than maximizing total indexed pages.